[Adobe Stock]

Elon Musk and a trio of senior xAI employees introduced the newest iteration of his AI chatbot, Grok 3, which the company hails as “the smartest AI on Earth.” In a live video on X (formerly Twitter), Musk stressed how quickly Grok 3 had leapt ahead of its predecessor:

“We’re very excited to present Grok 3, which is an order of magnitude more capable than Grok 2 in a very short period of time,” Musk said. “Our team’s been working extremely hard over the last few months to improve Grok as much as we can, so we can give all of you access to it.”

Musk—joined by xAI Chief Engineer Igor Babuschkin, as well as co-founders Yuhuai (Tony) Wu (a former research scientist at Google and Stanford) and Jimmy Ba (an assistant professor at the University of Toronto)—touted Grok 3’s problem-solving strengths, saying it can tackle “complex physics, advanced mathematics, and coding tasks in ways that would normally take people hours.” The model, he added, is “continuously improving on a daily basis,” which Musk credits to xAI’s new super-sized data center.

A version of the large language model on lmarena.ai, a site where users vote on anonymous chatbot models, jumped to the top of the rankings, edging out DeepSeek R1 and some of the latest models from Google and OpenAI.

[https://lmarena.ai/]

Early results for an initial version of Grok 3, code-named “Chocolate,” made it the first AI model ever to reach a score of 1,400 on the leaderboard.
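For readers unfamiliar with arena-style rankings, the score is a rating on an Elo-like scale derived from head-to-head votes. The Python sketch below uses the classic Elo formulas to show what a rating gap means in practice. It is an illustration, not LMArena's code: LMArena has described fitting a Bradley-Terry model to all votes rather than updating ratings one vote at a time, and the K-factor of 4 and the 1,360 rival rating are hypothetical values chosen for the example.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo logistic model (base 10, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a: float, rating_b: float, a_won: bool,
               k: float = 4.0) -> tuple[float, float]:
    """Online Elo update after a single vote; k=4 is an illustrative assumption."""
    e_a = expected_score(rating_a, rating_b)
    s_a = 1.0 if a_won else 0.0
    delta = k * (s_a - e_a)
    # Points gained by one side are lost by the other (zero-sum update).
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Hypothetical matchup: a 1,400-rated model vs. a 1,360-rated rival.
    p = expected_score(1400, 1360)
    print(f"Expected win rate for the 1,400 model: {p:.3f}")
    new_a, new_b = elo_update(1400, 1360, a_won=False)
    print(f"After one upset loss: {new_a:.2f} vs {new_b:.2f}")
```

Under this model, a 40-point lead corresponds to an expected win rate of only about 56% in head-to-head votes.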

Accessing Grok: The role of Grok.com

According to Musk and the xAI team, grok.com is the primary web portal for accessing Grok. While Grok is also available within X through an X Premium+ subscription, the web version at grok.com generally receives the newest features first. This is partly because mobile apps and in-app versions must go through app store approval, which can cause delays. As a result, grok.com will offer more advanced functionality, including some emerging tools that may not appear on mobile for several weeks.

A gigantic GPU cluster expansion

Initially built in about 122 days last year with 100,000 GPUs, xAI’s cluster was then doubled in a second construction phase—reaching roughly 200,000 GPUs total—making it, in Musk’s words, “one of the largest fully connected H100 clusters of its kind.”

From the start, xAI housed its data center, nicknamed “Colossus,” in a repurposed Electrolux factory in Memphis, Tennessee. The team quickly added power capacity with temporary generators, Tesla Megapacks to buffer power surges, and a novel liquid-cooling setup designed for 100,000 to 200,000 GPUs. Along the way, they faced frequent debugging problems, such as mismatched BIOS firmware, cabling issues, and occasional bit flips caused by cosmic rays, yet brought the facility online at record speed.

Earlier, Musk boasted that Grok 3 bested rivals including ChatGPT, Google DeepMind’s latest Gemini models, and others. Speaking via video at the World Government Summit in Dubai on February 13, Musk called Grok 3 “scary smart” and “very powerful in reasoning… It comes up with solutions you wouldn’t even anticipate, non-obvious solutions.” He also repeated his description of it as “the smartest AI on Earth.”

“Now might be the last time that any AI is better than Grok.”

–Musk, in a reference to OpenAI and other rivals on February 13

So far, Musk’s claims hinge on xAI’s internal testing. Skepticism grew after Benjamin De Kraker, then an xAI engineer, posted a coding-focused AI ranking on X that placed Grok 3 below OpenAI’s top models. The post reportedly led to a dispute with xAI management and, ultimately, De Kraker’s resignation. Similarly, a Reddit discussion on r/singularity examined xAI’s self-reported Grok 3 benchmarks and noted that some comparisons used older or incomplete competitor data.

The Reddit thread also questioned Musk’s claim of “continuous daily improvement.” Many users doubted that it meant retraining a model the size of Grok 3 from scratch every day. Commenters instead suggested that Musk might be referring to continued pre-training on new data or to more targeted fine-tuning, and some speculated about more efficient methods, such as test-time learning, that could deliver ongoing improvement without resource-intensive daily retraining.

Training at “Colossus”

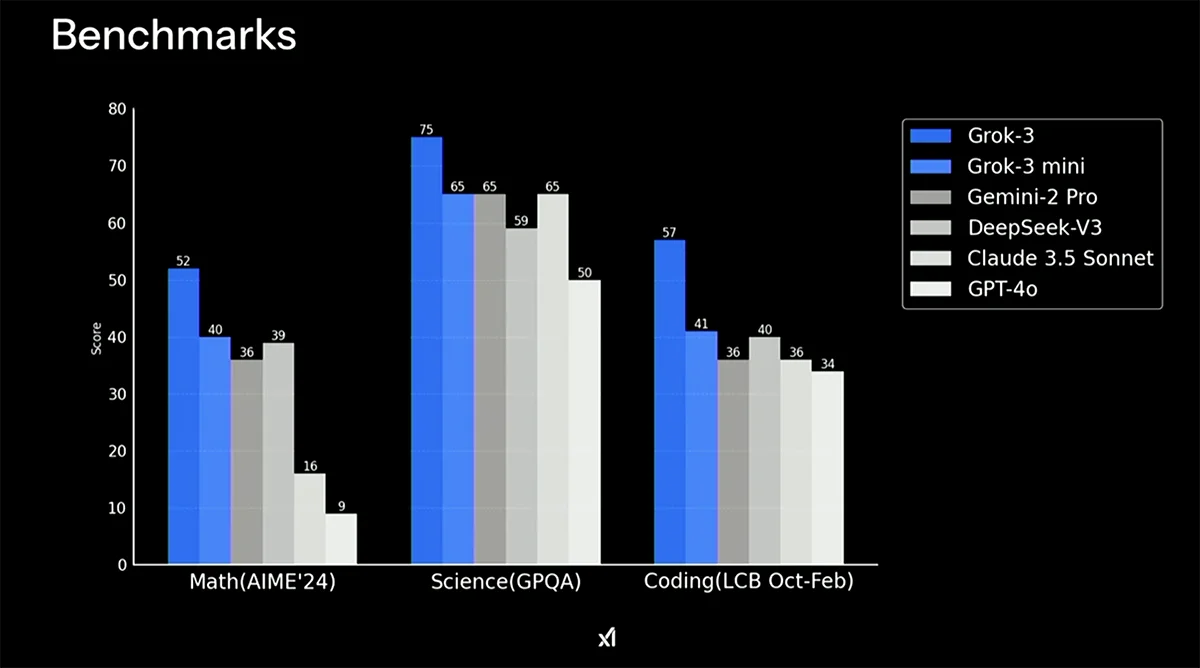

High-level benchmark comparisons for math, science, and coding [xAI]

In any event, Grok 3 was trained on a massive amount of compute at xAI’s “Colossus” supercomputer. Built in eight months, Colossus is said to be ten times more powerful than xAI’s previous supercomputer (Musk said the real value could be closer to 15x). Grok 3 reportedly consumed around 200 million GPU-hours, dwarfing many peers:

- GPT-3 (175B parameters): roughly 3 million GPU-hours on Nvidia V100.

- Meta’s Llama 3.1 (405B): roughly 31 million GPU-hours on Nvidia H100-80GB.

- DeepSeek V3 (671B): roughly 2.8 million GPU-hours on Nvidia H800.

(Source: Wikipedia)
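The GPU-hour figures above can be put side by side with a few lines of Python. This is back-of-the-envelope arithmetic only: GPU-hours on V100, H100, and H800 chips are not equivalent units of compute, and the wall-clock estimate assumes, hypothetically, that all 200,000 Colossus GPUs worked on Grok 3 in parallel at full utilization, which xAI has not stated.

```python
# Approximate GPU-hour figures as quoted in the article (mixed GPU generations).
GPU_HOURS = {
    "Grok 3 (reported)": 200e6,
    "GPT-3": 3e6,
    "Llama 3.1 405B": 31e6,
    "DeepSeek V3": 2.8e6,
}

# Cluster size quoted in the article; assumed (hypothetically) to be fully used.
GROK3_GPUS = 200_000


def ratio_vs(baseline: str, target: str = "Grok 3 (reported)") -> float:
    """How many times more GPU-hours the target used than the baseline."""
    return GPU_HOURS[target] / GPU_HOURS[baseline]


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days of training if every GPU ran in parallel at full utilization."""
    return gpu_hours / num_gpus / 24


if __name__ == "__main__":
    for name in ("GPT-3", "Llama 3.1 405B", "DeepSeek V3"):
        print(f"Grok 3 vs {name}: {ratio_vs(name):.1f}x GPU-hours")
    days = wall_clock_days(GPU_HOURS["Grok 3 (reported)"], GROK3_GPUS)
    print(f"~{days:.0f} days on {GROK3_GPUS:,} GPUs")
```

On these numbers, Grok 3’s reported budget is roughly 6.5 times Llama 3.1 405B’s and about 71 times DeepSeek V3’s, and 200 million GPU-hours spread across 200,000 GPUs works out to roughly 42 days of continuous training.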

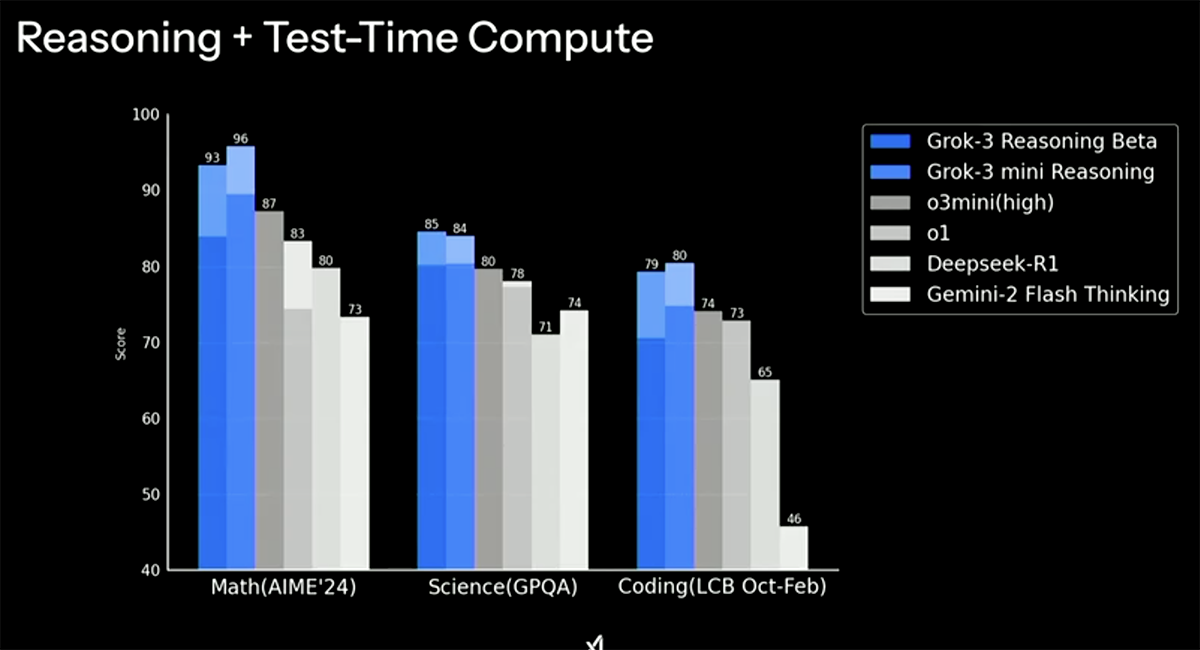

Performance when using enhanced reasoning or increased compute [xAI]

Self-improvement features and training methods

xAI presents self-improvement as Grok 3’s distinguishing trait. According to Musk, the model monitors its own outputs for accuracy, “reflects on the data,” and corrects any misinformation, an approach xAI believes will reduce AI “hallucinations.” xAI claims this “self-correcting mechanism” sets Grok 3 apart from GPT-4 and Anthropic’s Claude, which rely on periodic updates rather than live self-adjustment.

“Digital intelligence will be more than 99% of all intelligence in the future.”

–Musk

Another differentiator is synthetic data training, intended to avoid legal entanglements over web-scraped data and to emphasize logical consistency. In addition, xAI says Grok 3 uses reinforcement learning to refine its performance through iterative feedback. Musk claims in a video posted by Bloomberg that synthetic data, combined with self-correction and reinforcement learning, gives Grok 3 superior reasoning:

“If it has data that is wrong, it will actually reflect on that and remove the data that is wrong. Even without fine-tuning, Grok 3, the base model, is better than Grok 2.”

Maximally truth-seeking: In the presentation, Musk and the xAI team repeatedly emphasize Grok 3’s core philosophy of pursuing truth as rigorously as possible, invoking the term “grok” (from Heinlein’s Stranger in a Strange Land) to represent deep understanding. Musk has said, “You must absolutely, rigorously pursue truth… or you will be suffering from delusion or error.” xAI positions this principle as central to Grok 3’s design.

Future hardware: Blackwell GB200s on the way

Musk is reportedly planning a new data center to further boost xAI’s GPU cluster, according to a report by The Information. A deal with Dell Technologies—potentially worth over $5 billion—would provide AI-optimized servers containing Nvidia Blackwell GB200 GPUs, as detailed by Bloomberg. Delivery is expected this year, building on xAI’s Memphis supercomputer project, which already employs a combination of Dell and Super Micro servers.

Separately, Musk revealed that xAI’s next data center is expected to draw roughly five times as much power, scaling from 0.25 gigawatts to about 1.2 gigawatts. The plan includes future Nvidia Blackwell GB200 (or potentially GB300) GPUs, indicating xAI’s intention to keep expanding its large-scale compute infrastructure well beyond the current footprint.
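The power figures in this paragraph lend themselves to a quick sanity check. The sketch below assumes, hypothetically, that the 0.25 GW figure covers the whole Colossus facility with its roughly 200,000 GPUs; under that assumption it computes the scale-up factor and the implied all-in power budget per GPU, which includes CPUs, networking, and cooling, not just the GPU itself.

```python
CURRENT_GW = 0.25       # current draw quoted by Musk
NEXT_GW = 1.2           # planned draw for the next data center
CURRENT_GPUS = 200_000  # Colossus GPU count quoted in the article (assumed to share the 0.25 GW)


def scale_factor(current_gw: float, next_gw: float) -> float:
    """Ratio of planned to current facility power."""
    return next_gw / current_gw


def facility_watts_per_gpu(total_gw: float, num_gpus: int) -> float:
    """All-in facility power per GPU in watts (GPU plus CPUs, networking, cooling)."""
    return total_gw * 1e9 / num_gpus


if __name__ == "__main__":
    print(f"Power scale-up: {scale_factor(CURRENT_GW, NEXT_GW):.1f}x")
    per_gpu = facility_watts_per_gpu(CURRENT_GW, CURRENT_GPUS)
    print(f"Implied all-in budget today: ~{per_gpu:.0f} W per GPU")
```

The ratio comes out to 4.8, consistent with Musk’s “roughly five times,” and the implied facility budget is about 1,250 W per GPU. Blackwell systems draw more power per GPU than H100s, so the same arithmetic should not be read as a forecast of GPU counts for the new site.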

Comparison of GPU features mentioned in this article:

| Feature | H100 (SKY-TESL-H100) | H800 (SKY-TESL-H800) | H200 (HPC Datasheet) | Blackwell GB200 |
|---|---|---|---|---|
| Architecture | Hopper | Hopper | Enhanced Hopper | Blackwell |
| Memory capacity | 80 GB HBM2e | 80 GB HBM2e | 141 GB HBM3e | 180 GB HBM3e per GPU |
| Memory bandwidth | 2 TB/s | 2 TB/s | 4.8 TB/s | 8 TB/s per GPU |
| FP8 performance | 3,958 TFLOPS (with sparsity) | 3,958 TFLOPS (with sparsity) | 3,341–3,958 TFLOPS (SXM/NVL) | 20 PFLOPS (FP4) |
| FP64 performance | 24 TFLOPS | 0.8 TFLOPS | 30–34 TFLOPS | 40 TFLOPS per GPU |
| Power consumption | 350 W (PCIe) | 350 W (PCIe) | 600 W (NVL) / 700 W (SXM) | 1,200 W/rack (NVL72, liquid-cooled) |
| Key AI features | 4th-gen Tensor Cores; MIG (7 instances); 600 GB/s NVLink | 4th-gen Tensor Cores; MIG (7 instances); 400 GB/s NVLink | 900 GB/s NVLink; 50% TCO reduction; HBM3e memory | 2nd-gen Transformer Engine; 1.8 TB/s NVLink 5.0; dedicated decompression engine; RAS engine |

The evolving beta nature of Grok 3

Musk and his colleagues regularly remind users that Grok 3 remains in beta. They emphasize that new capabilities, such as improved conversational memory or advanced research modules, will roll out incrementally. “It’s still being refined, and we expect it to improve literally every day,” Musk said, highlighting how quickly the model and its feature set can change.

The Grok 3 “family” of models

xAI stresses that Grok 3 is not just one monolithic model. In fact, there are multiple variants:

- Grok 3 (the main flagship model)

- Grok 3 mini (optimized for speed, though potentially less accurate)

- Grok 3 Reasoning and Grok 3 mini Reasoning (focused on more complex “thinking through” of math, science, and programming problems)

Additionally, within the iOS/web Grok app, users can select “Think” or “Big Brain” modes for deeper analysis if needed.

“Big Brain” mode is another noteworthy feature. According to Musk, it allows Grok 3 to combine multiple concepts, such as blending different game ideas (e.g., Tetris plus Bejeweled), and generate entirely new structures or frameworks. The team briefly demoed the resulting game in the video announcement on X. It also described plans for a single integrated “speech + comprehension” system that would enable genuine voice interaction, rather than merely layering text-to-speech on top.

New features: DeepSearch and voice mode

During the announcement, Musk and his team unveiled two notable new features. The first, DeepSearch, is xAI’s answer to the “deep research” tools that OpenAI has recently made available to some subscribers: Grok 3 scans the internet and X for relevant information, then delivers an abstract summarizing its findings. The second, voice mode, is slated for release about a week after Grok 3’s launch and adds a synthesized voice, allowing users to interact with the chatbot in spoken form rather than (or in addition to) text.

Grok as a personal assistant: Grok 3 can be used across diverse tasks—essentially acting like “an infinite number of interns.” Whether it’s code generation, daily research inquiries, or gaming strategies, xAI envisions Grok 3 as a constant companion to help users complete a wide range of projects.

xAI has already begun rolling out Grok 3:

- X Premium+: Users at this subscription tier enjoy priority access to Grok 3.

- SuperGrok: Priced at $30/month (or $300/year), it offers extra “reasoning” queries, expanded DeepSearch allowances, and unlimited image generation.

Future open-sourcing plans

Musk has also signaled xAI’s intention to open-source previous versions once a newer model is fully stable. While Grok 3 itself may not be open-sourced in the near term, the company still plans to release Grok 2 under an open-source license, continuing xAI’s practice of open-sourcing older models once they have been superseded.